Are You Talking to a Real Person Online?

Have you ever wondered if the person you’ve been chatting to online is real? I have some bad news for you, but some good news for science. The good news is that artificial intelligence has advanced to a level that opens numerous possibilities for positive scientific progress. The bad news is that you may have been talking to a bot online.

Bots plague the online world. A few months ago, I wrote a piece on LinkedIn about spammers and how to spot them. However, LinkedIn is now filled with more serious bots, operating systematically and infiltrating the markets with more concerning agendas. Of course, there are still genuine profiles and interactions on social media. I, for one, have seen many relationships blossom on Facebook and, of course, business partnerships form on LinkedIn. But how bad is it to converse or collaborate with a bot?

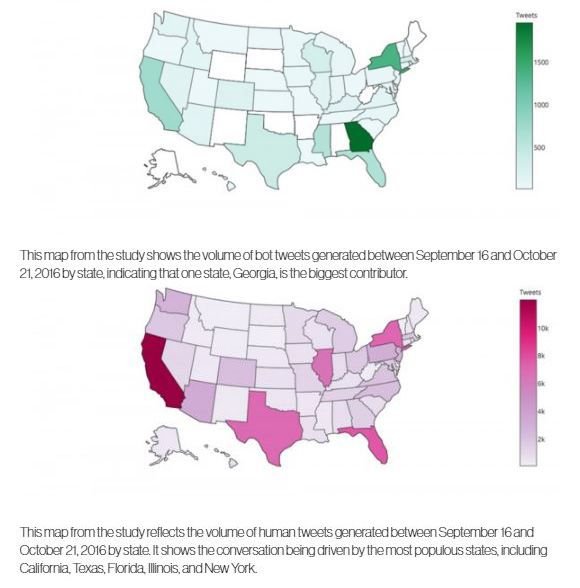

A study published before the U.S. election this year found that 400,000 bots had been released on Twitter, tweeting, being retweeted, and producing election-related messages on a massive scale: in fact, they accounted for about 20% of the political messages. These bots are highly influential. According to Alessandro Bessi and Emilio Ferrara of the University of Southern California's Information Sciences Institute, they are capable of distorting the online debate.

By measuring how many connections each account has to other accounts and how many different users retweet it, the researchers concluded that people seem unable to tell whether a source they encounter is a human or a bot. Bots are retweeted at the same rate as humans. Because bots generate far more tweets, they are retweeted more often overall and become highly influential. The implications are serious: misinformation, rumor-mongering, conspiracy theories, and more.

It doesn’t end there. These bots utilise the powers of artificial intelligence to chat with people. Yes, they can mimic human behaviour, polarising sentiment and generating strategic responses that push their agenda. Add the ability to post 1,000 tweets per hour, and they become a powerful tool for manipulating the Twitterverse.

According to the study, 75% of the bots found supported the Republican candidate, Donald Trump. The pro-Trump bots produced a large volume of positive messages about the candidate, while among Clinton supporters, neutral tweets outnumbered positive ones.

A few years ago, AI was put to more humane, practical uses: making everyday life more efficient and even providing entertainment, as with Oliverbot.

Now, AI is being used to advance political agendas. Should this technology fall into the wrong hands, the consequences for the human race could be drastic and tragic.

Image and Study – http://firstmonday.org/ojs/index.php/fm/article/view/7090/5653
